Cluster Manager Installation#

Prerequisites#

- A minimum of four (4) machines running Ubuntu 18-20, CentOS 7/RHEL 7, or CentOS 8/RHEL 8:

- Cluster Manager: One (1) machine with at least 4GB of RAM for Cluster Manager, which will proxy TCP and HTTP traffic.

- Load Balancer: One (1) machine with 4GB of RAM for the Nginx load balancer and Twemproxy. This server is not necessary if you use your own load balancer and Redis Cluster on the Gluu Server installations.

- Gluu Server(s): At least two (2) machines with at least 8GB of RAM for Gluu Servers. SELinux should be disabled for nochroot installations.

- Redis Cache Server (optional): One (1) machine with at least 4GB of RAM if you want to use Redis for caching.

Ports#

| Gluu Servers (port) | Description |
|---|---|
| 22 | SSH |
| 443 | HTTPS |
| 30865 | Csync2 |
| 1636 | LDAPS |
| 4444 | LDAP Administration |
| 8989 | LDAP Replication |
| 16379 | Stunnel |

| Load Balancer (port) | Description |
|---|---|
| 22 | SSH |
| 80 | HTTP |
| 443 | HTTPS |

Note

The Load Balancer is the only node that should be reachable on ports 80 and 443 from outside your cluster network.

| Redis Cache Server (port) | Description |
|---|---|
| 16379 | Stunnel |

| Cluster Manager (port) | Description |
|---|---|
| 22 | SSH |
| 1636 | LDAPS |
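
As an illustration of how the LDAP ports in the Gluu Servers table might be opened between nodes, here is a minimal sketch that only prints firewall rules for review. It assumes an Ubuntu node that uses ufw; the peer address 10.0.0.12 and the function name print_peer_rules are placeholders, not part of Cluster Manager.

```shell
#!/bin/sh
# Ports that Gluu Server nodes use among themselves for LDAP
# (LDAPS, LDAP Administration, LDAP Replication).
GLUU_PEER_PORTS="1636 4444 8989"

# Print (do not run) one ufw rule per port for a single peer node.
# Assumption: ufw is the firewall in use; adapt for firewalld or iptables.
print_peer_rules() {
    peer_ip="$1"
    for port in $GLUU_PEER_PORTS; do
        echo "ufw allow from $peer_ip to any port $port proto tcp"
    done
}

# Example: review the rules for a peer at a placeholder address,
# then run them by hand as root on the other Gluu Server node.
# print_peer_rules 10.0.0.12
```

Printing the rules instead of applying them keeps the sketch safe to run on any machine and lets you check each rule against your own network layout first.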

Port Usage#

- 22 is used by Cluster Manager to pull logs and make adjustments to the systems.

- 80 and 443 carry HTTP and HTTPS traffic. 443 must be open between the Load Balancer and the Gluu Servers.

- 1636, 4444 and 8989 are necessary for LDAP usage and replication. These should be open between Gluu Server nodes.

- 30865 is the default port for Csync2 file system replication.

- 16379 secures the caching communication between the Gluu Servers and the Redis Cache Server over Stunnel.
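
Before installing, it can help to confirm that the ports listed above are reachable from the machine that needs them. The following is a rough sketch, assuming bash and the coreutils timeout command are available; the role names, function names, and the hostname in the example are made up for illustration.

```shell
#!/bin/sh
# Ports per node role, copied from the tables in the Ports section.
ports_for_role() {
    case "$1" in
        gluu)          echo "22 443 30865 1636 4444 8989 16379" ;;
        load-balancer) echo "22 80 443" ;;
        cache)         echo "16379" ;;
        manager)       echo "22 1636" ;;
        *)             return 1 ;;
    esac
}

# Return 0 if a TCP connection to host:port succeeds within 3 seconds.
# Relies on bash's /dev/tcp pseudo-device, so bash is invoked explicitly.
check_port() {
    timeout 3 bash -c 'exec 3<>"/dev/tcp/$1/$2"' _ "$1" "$2" 2>/dev/null
}

# Report the state of every port of a role on one host.
check_role() {
    role="$1"; host="$2"
    for port in $(ports_for_role "$role"); do
        if check_port "$host" "$port"; then
            echo "$host:$port open"
        else
            echo "$host:$port unreachable"
        fi
    done
}

# Example (placeholder hostname):
# check_role gluu gluu1.example.org
```

A port reported as unreachable may simply have no service listening yet, so the check is most useful for SSH before installation and for the remaining ports once the Gluu Servers are deployed.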

Proxy#

If you're behind a proxy, you'll have to configure it inside the container/chroot as well.

Log into each Gluu node and set the HTTP proxy in the container/chroot to your proxy's URL like so:

# /sbin/gluu-serverd login

Gluu.root# vi /etc/yum.conf

Insert the proxy setting into the [main] section:

[main]

.

.

proxy=http://proxy.example.org:3128/

Save the file.

The following error will be shown in Cluster Manager if the proxy is not configured properly inside the chroot:

One of the configured repositories failed (Unknown), and yum doesn't have enough cached data to continue... etc.

Could not retrieve mirrorlist http://mirrorlist.centos.org/?release=7&arch=x86_64&repo=updates&infra=stock error was 14: curl#7 - "Failed to connect to 2604:1580:fe02:2::10: Network is unreachable"
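
If the proxy has to be set on several nodes, the manual edit above can be scripted. This is a minimal sketch, not a Gluu-provided tool: the function name set_yum_proxy is hypothetical, it assumes the file already contains a [main] section, and it removes every existing line starting with proxy= before adding the new one.

```shell
#!/bin/sh
# Set "proxy=<url>" directly under the [main] section of a yum.conf-style
# file. Running it again with the same URL leaves a single proxy line.
set_yum_proxy() {
    conf="$1"; url="$2"
    tmp="$(mktemp)"
    awk -v url="$url" '
        /^proxy=/   { next }                 # drop any previous proxy line
                    { print }                # keep every other line
        /^\[main\]/ { print "proxy=" url }   # add the setting after [main]
    ' "$conf" > "$tmp" && cat "$tmp" > "$conf"
    rm -f "$tmp"
}

# Example, run inside the container/chroot on each Gluu node:
# set_yum_proxy /etc/yum.conf "http://proxy.example.org:3128/"
```

Writing the result back with cat, rather than moving the temporary file over the original, keeps the ownership and permissions of the existing file.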

Installing Cluster Manager#

Download Cluster Manager#

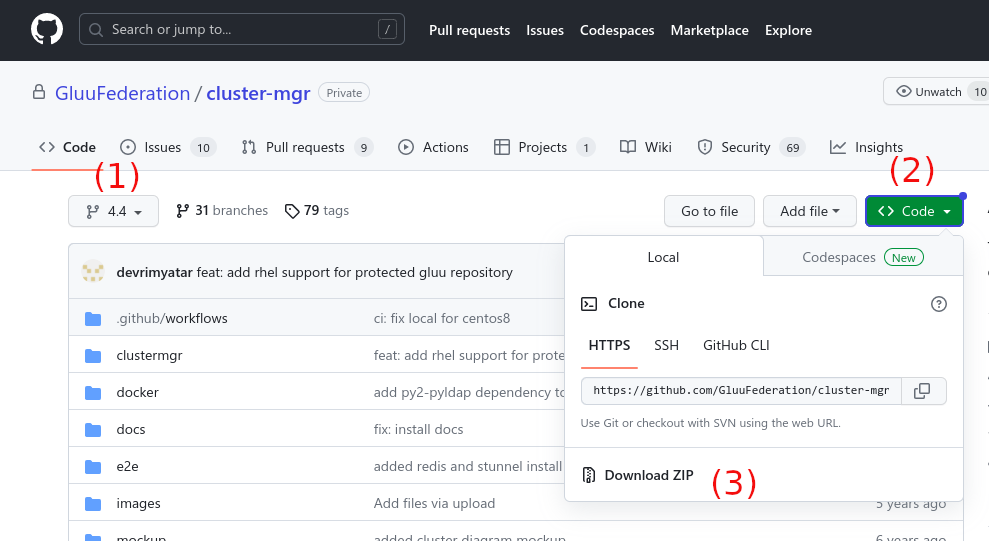

Because the Cluster Manager package is protected, download it through a browser. If you have access to the Cluster Manager GitHub repository, navigate to https://github.com/GluuFederation/cluster-mgr

(1) Select branch 4.4

(2) Click the Code button

(3) Click Download ZIP

Upload the downloaded file to the VM on which Cluster Manager will be installed. In this guide, the file cluster-mgr-4.4.zip was uploaded to /root.

SSH & Keypairs#

Give Cluster Manager the ability to establish an SSH connection to the servers in the cluster. This includes the NGINX/load-balancing server. A simple key generation example:

ssh-keygen -t rsa -b 4096 -m PEM

- This will initiate a prompt to create a key pair. Cluster Manager must be able to open connections to the servers.

Note

Cluster Manager now works with encrypted keys and will prompt you for the password any time Cluster Manager is restarted.

- Copy the public key (default is id_rsa.pub) to the /root/.ssh/authorized_keys file of all servers in the cluster, including the Load Balancer (unless another load-balancing service will be used) and Redis Cache Server. This MUST be the root authorized_keys.
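
One possible way to copy the key to every node is a small loop around ssh-copy-id. The sketch below assumes that root can still log in over SSH with a password on each target node (otherwise append the key by hand); the function name distribute_key and the hostnames are placeholders.

```shell
#!/bin/sh
# Copy one public key to root's authorized_keys on every node given.
# Set DRY_RUN=1 to print the commands instead of running them.
distribute_key() {
    pubkey="$1"; shift
    for host in "$@"; do
        ${DRY_RUN:+echo} ssh-copy-id -i "$pubkey" "root@$host"
    done
}

# Example with placeholder hostnames; drop DRY_RUN=1 to actually copy:
# DRY_RUN=1 distribute_key "$HOME/.ssh/id_rsa.pub" gluu1.example.org gluu2.example.org lb.example.org
```

Running it once with DRY_RUN=1 shows exactly which hosts will receive the key before any connection is made.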

In the following installations, a JDK is required for key rotation. If you are not going to use Cluster Manager's key rotation feature, you don't need to install it.

To build Cluster Manager, please refer to building pyz files

Install dependencies#

Install on Ubuntu 20/22#

Note

If your system has the python3-blinker package, remove it with sudo apt remove python3-blinker. A newer version is required, and pip will install it.

apt install -y python3-pip openjdk-11-jre-headless

pip3 install --upgrade pip

pip3 install --upgrade setuptools

pip3 install --upgrade psutil

pip3 install --upgrade python3-ldap

If you have not yet downloaded the Cluster Manager package from GitHub and uploaded it to this machine, follow the steps in the Download Cluster Manager section first. Then install it:

pip3 install /root/cluster-mgr-4.4.zip

Install on RedHat 7 and CentOS 7#

Install CentOS 7 epel-release:

yum install -y https://dl.fedoraproject.org/pub/epel/epel-release-latest-7.noarch.rpm

yum repolist clean

Note

If your Gluu Server nodes will be Red Hat 7, please enable epel-release on each node (by repeating the steps above) before attempting to install Gluu Server via Cluster Manager.

Install dependencies:

yum install -y wget which curl python3 python3-pip java-1.8.0-openjdk

pip3 install --upgrade pip

pip3 install --upgrade setuptools

pip3 install --upgrade psutil

pip3 install --upgrade python3-ldap

If you have not yet downloaded the Cluster Manager package from GitHub and uploaded it to this machine, follow the steps in the Download Cluster Manager section first. Then install it:

pip3 install /root/cluster-mgr-4.4.zip

There may be a few innocuous warnings, but this is normal.

Install on RedHat 8 and CentOS 8-Stream#

Install epel-release:

yum install -y https://dl.fedoraproject.org/pub/epel/epel-release-latest-8.noarch.rpm

yum repolist clean

Note

If your Gluu Server nodes will be Red Hat 8, please enable epel-release on each node (by repeating the steps above) before attempting to install Gluu Server via Cluster Manager.

Install dependencies:

yum install -y python3 python3-pip java-11-openjdk-headless

pip3 install --upgrade pip

pip3 install --upgrade setuptools

pip3 install --upgrade psutil

pip3 install --upgrade python3-ldap

If you have not yet downloaded the Cluster Manager package from GitHub and uploaded it to this machine, follow the steps in the Download Cluster Manager section first. Then install it:

pip3 install /root/cluster-mgr-4.4.zip
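
Whichever platform you installed on, a quick check that the expected tools ended up on the PATH can save time later. This is only a sketch: the helper name missing_commands is invented here, and the package name clustermgr4 is taken from the uninstall command at the end of this page.

```shell
#!/bin/sh
# Print the name of every given command that is not found on PATH.
# Returns non-zero if at least one command is missing.
missing_commands() {
    status=0
    for cmd in "$@"; do
        if ! command -v "$cmd" >/dev/null 2>&1; then
            echo "$cmd"
            status=1
        fi
    done
    return "$status"
}

# Example: check the tools this guide installs, then confirm that pip
# knows about the Cluster Manager package.
# missing_commands python3 pip3 java clustermgr4-cli
# pip3 show clustermgr4
```

An empty result from missing_commands means every listed command was found; java only matters if you plan to use key rotation.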

Warning

All Cluster Manager commands need to be run as root.

Add Key Generator#

If automated key rotation is required, you'll need to download the keygen.jar. Prepare the OpenID Connect keys generator by using the following commands:

mkdir -p $HOME/.clustermgr4/javalibs

wget https://maven.gluu.org/maven/org/gluu/oxauth-client/<Grab_your_Gluu_Server_version_repo_link>/oxauth-client-4.x.x.Final-jar-with-dependencies.jar -O $HOME/.clustermgr4/javalibs/keygen.jar
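
Because wget with -O writes to the target file even when the request fails, a wrong repository link can leave an empty file or an HTML error page named keygen.jar. A minimal sanity check, assuming nothing beyond standard shell tools (the function name looks_like_jar is made up for this example):

```shell
#!/bin/sh
# Return 0 if the file exists, is non-empty, and starts with the ZIP
# magic bytes "PK" (a JAR file is a ZIP archive).
looks_like_jar() {
    [ -s "$1" ] && [ "$(head -c 2 "$1")" = "PK" ]
}

# Example:
# looks_like_jar "$HOME/.clustermgr4/javalibs/keygen.jar" \
#     || echo "keygen.jar is missing or not a JAR; check the download URL"
```

This only inspects the first two bytes, so it catches failed downloads but does not prove that the archive is complete or is the right version.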

Automated key rotation can be configured inside the Cluster Manager UI.

Stop/Start/Restart Cluster Manager#

The following commands will stop/start/restart all the components of Cluster Manager:

clustermgr4-cli stop

clustermgr4-cli start

clustermgr4-cli restart

Note

All the Cluster Manager logs can be found in the $HOME/.clustermgr4/logs directory

Create Credentials#

When Cluster Manager is run for the first time, it will prompt for creation of an admin username and password. This creates an authentication config file at $HOME/.clustermgr4/auth.ini.

Create New User#

We recommend creating an additional "cluster" user, other than the one used to install and configure Cluster Manager.

This is a basic security precaution, because the user SSHing into this server has unfettered access to every server connected to Cluster Manager. With a separate user, which will still be able to connect to localhost:5000, an administrator can give an operator limited access to a server, while still being able to take full control of Cluster Manager.

ssh -L 5000:localhost:5000 cluster@<server>

Log In#

Navigate to the Cluster Manager web GUI on your local machine:

http://localhost:5000/
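
If the page does not load right away, the service or the SSH tunnel may still be starting. The sketch below polls the port until it accepts a connection; it assumes bash and the coreutils timeout command are available, and the function name wait_for_port is hypothetical.

```shell
#!/bin/sh
# Poll host:port until a TCP connection succeeds or the attempts run out.
# Arguments: host, port, number of attempts (roughly one second apart).
wait_for_port() {
    host="$1"; port="$2"; tries="$3"
    while [ "$tries" -gt 0 ]; do
        # bash's /dev/tcp pseudo-device opens a TCP connection on redirect.
        if timeout 2 bash -c 'exec 3<>"/dev/tcp/$1/$2"' _ "$host" "$port" 2>/dev/null; then
            return 0
        fi
        tries=$((tries - 1))
        sleep 1
    done
    return 1
}

# Example: wait up to about 30 seconds for the GUI behind the tunnel.
# wait_for_port localhost 5000 30 && echo "Cluster Manager GUI is reachable"
```

A successful connection only shows that something is listening on the port; if the browser still shows an error, check the logs in $HOME/.clustermgr4/logs.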

Deploy Clusters#

Next, move on to deploy the Gluu cluster.

Uninstallation#

To uninstall Cluster Manager, run:

pip3 uninstall clustermgr4